
As part of a hackathon at work, I decided to put my Hololens app development skills to use and create a full-fledged holographic application. I feel that designing a game on any platform not only requires knowledge of developing for that platform but also stresses the application's UI, functionality, and overall user engagement. And what better game than the world-famous tile-matching puzzle, Tetris!

I have decided to make this project completely open source and available on GitHub here. Here's a YouTube link with a short video demo of the app. I'd love feedback and comments!


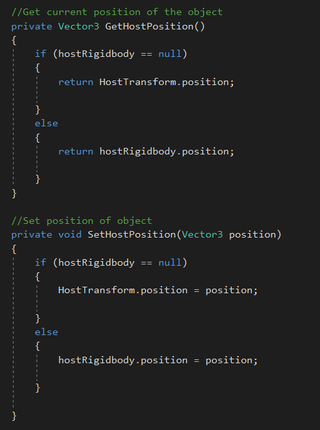

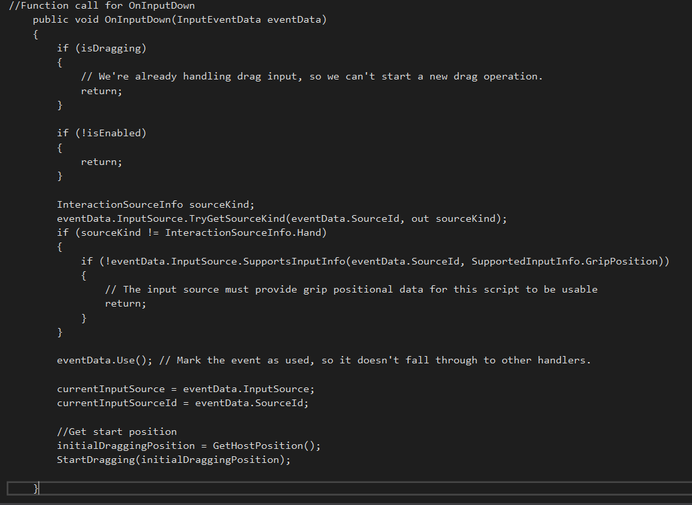

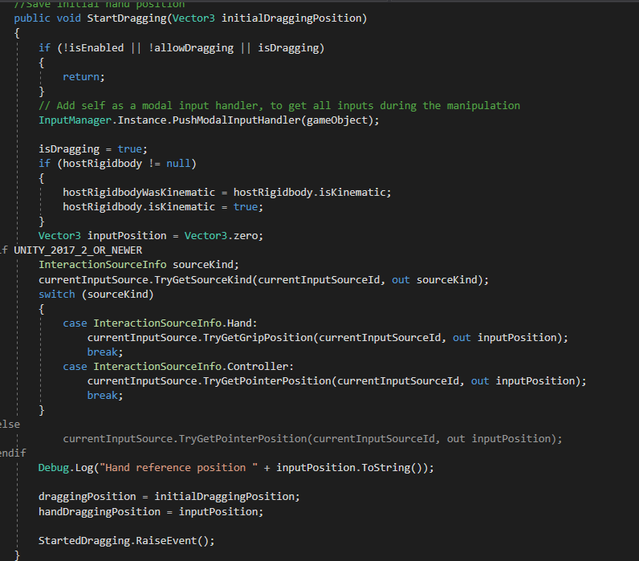

It's been radio silence since my last post (I know), but work has kept me super busy! I'm back with what I think was my biggest challenge in developing Hololens applications: moving holograms using gestures. Granted, the new MRTK has a HandDraggable script (renamed DragAndDropHandler) located here, but I found this script a little complicated for simpler Hololens applications. The script relies on both the camera and hand positions, and it very quickly seems to "lose" the hologram out in the weeds if the user moves his or her hand or head too fast. For my requirements (creating HoloTetris), I needed a simpler script that relies only on the user's hand position, which is monitored on every frame once the hologram is engaged. This allows for smoother movement of holograms across the screen.

I named this script ObjectControl; it implements the IInputHandler and IFocusable interfaces. Some of the most useful functions involve getting and setting the object's position, which comes in handy when engaging and moving the object. Code is provided on GitHub here, and some snippets and highlights are explained below. Two boolean fields, isDragging and isEnabled, keep track of the object's current status.
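The sketch below is a rough, Unity-free C# model of the state described above. It is not the ObjectControl code from GitHub. System.Numerics.Vector3 stands in for Unity's Vector3, and a plain hostPosition variable stands in for HostTransform.position. The boolean return values of OnInputDown and OnInputUp are an assumption that stands in for marking the event as used. The method names mirror the MRTK callbacks, but their bodies are hypothetical.

```csharp
using System;
using System.Numerics;

// Unity-free sketch of ObjectControl's state (hypothetical bodies).
// hostPosition stands in for HostTransform.position.
Vector3 hostPosition = new Vector3(0f, 0f, 2f);
Vector3 dragStartPosition = hostPosition;
bool isEnabled = true;   // may this hologram be dragged at all?
bool isDragging = false; // is a drag in progress right now?

Vector3 GetHostPosition() => hostPosition;
void SetHostPosition(Vector3 position) => hostPosition = position;

// Like OnInputDown: start a drag only when enabled and not already dragging.
// Returning true stands in for marking the event as used (eventData.Use()).
bool OnInputDown()
{
    if (!isEnabled || isDragging) return false;
    isDragging = true;
    dragStartPosition = GetHostPosition(); // StartDragging: record where the drag began
    return true;
}

// Like OnInputUp: end the drag; the hologram stays wherever it was last set.
bool OnInputUp()
{
    if (!isDragging) return false;
    isDragging = false; // StopDragging
    return true;
}

Console.WriteLine(OnInputDown()); // True: drag starts
Console.WriteLine(OnInputDown()); // False: already dragging
SetHostPosition(new Vector3(0.5f, 0f, 2f)); // UpdateDragging would do this every frame
Console.WriteLine(OnInputUp());   // True: drag ends
```

Keeping isEnabled separate from isDragging lets a hologram be locked in place without touching the drag logic. One example would be a Tetris piece that has already landed.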

IInputHandler declares the OnInputDown and OnInputUp functions, which begin and end the dragging action. OnInputDown marks the event as used, so that the dragging action isn't interrupted by another user action until it completes. StartDragging is another function in MRTK; it determines the source that initiated the dragging gesture (hand or controller). The MRTK version of StartDragging also takes into account the camera position and the pivot position of the hand. I stripped this function down to simply record the object's position at the start of dragging. UpdateDragging is called during Update. Like StartDragging, it continues to monitor and update the object's position based on the hand's current position. The object's new position is calculated with the Vector3.Slerp function. This function spherically interpolates between the object's current position and the dragged position (the current position offset by the scaled hand movement):

```csharp
Vector3 draggedPosition = HostTransform.position + (handDistance * DistanceScale);
Vector3 newPosition = Vector3.Slerp(HostTransform.position, draggedPosition, PositionLerpSpeed);
SetHostPosition(newPosition);
```

Finally, a few functions gracefully handle exiting the dragging motion once the user releases the drag gesture. This invokes the OnInputUp callback, where I mark the event as used (eventData.Use()) before calling a StopDragging function to fix the final position of the hologram.

The previous post focused on creating a simple text popup window based on user interactions. In this post, we take things one step further by creating UIs that can combine text, images, buttons, scrollbars, and more, giving the user a larger set of interactions. We will use the Canvas game object in Unity to enable these features.
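The sketch below reproduces the UpdateDragging step from the HoloTetris dragging walkthrough outside Unity, so you can see what it does frame by frame. System.Numerics.Vector3 stands in for Unity's Vector3. The Slerp helper is a hand-written approximation of Unity's Vector3.Slerp, which treats its inputs as directions from the origin: it rotates between them and interpolates their magnitudes linearly. Several details are hypothetical: the values of DistanceScale and PositionLerpSpeed, and the assumption that handDistance is the hand's movement since the previous frame.

```csharp
using System;
using System.Numerics;

const float DistanceScale = 2f;       // hypothetical: amplifies hand movement
const float PositionLerpSpeed = 0.2f; // hypothetical: fraction of the way moved per frame

// Approximation of Unity's Vector3.Slerp: inputs are treated as directions from
// the origin; the direction is rotated and the magnitude is interpolated linearly.
Vector3 Slerp(Vector3 a, Vector3 b, float t)
{
    t = Math.Clamp(t, 0f, 1f);
    float magA = a.Length(), magB = b.Length();
    if (magA < 1e-6f || magB < 1e-6f) return Vector3.Lerp(a, b, t); // no direction to rotate
    Vector3 dirA = a / magA, dirB = b / magB;
    float cos = Math.Clamp(Vector3.Dot(dirA, dirB), -1f, 1f);
    float theta = MathF.Acos(cos);
    float sin = MathF.Sin(theta);
    if (sin < 1e-6f) return Vector3.Lerp(a, b, t); // (anti)parallel: fall back to a straight line
    Vector3 dir = (MathF.Sin((1 - t) * theta) / sin) * dirA + (MathF.Sin(t * theta) / sin) * dirB;
    return dir * (magA + (magB - magA) * t);
}

// One frame of UpdateDragging: offset by the scaled hand movement, then move
// part of the way there.
Vector3 UpdateDragging(Vector3 hostPosition, Vector3 handDistance)
{
    Vector3 draggedPosition = hostPosition + handDistance * DistanceScale;
    return Slerp(hostPosition, draggedPosition, PositionLerpSpeed);
}

var position = new Vector3(0f, 0f, 2f);   // hologram 2 m in front of the origin
var handMove = new Vector3(0.1f, 0f, 0f); // hand moved 10 cm to the right this frame
for (int frame = 0; frame < 3; frame++)
{
    position = UpdateDragging(position, handMove);
    Console.WriteLine($"frame {frame}: x = {position.X:F3}, z = {position.Z:F3}");
}
```

Because Slerp treats positions as directions from the world origin, the hologram moves along an arc around the origin. Vector3.Lerp would move it in a straight line instead. For small per-frame steps like these, the two results are nearly identical.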

After a long hiatus, I'm back with more Hololens development!

In this tutorial, we will talk about how to add tool tips to holographic objects when you gaze at them. In many scenarios, multiple voice and gesture commands can be used to interact with an object. Tool tips or popup menus that show these options make your holographic experiences much more user friendly.

Short note but exciting news! The Hololens was used during the making of the new Steven Spielberg movie, Ready Player One. A number of VR and MR headsets were used to recreate the Oasis network (also really well described in the book). I'm stoked to read this, super excited to watch the movie, and highly recommend the 2011 book by Ernest Cline as well.

In this post, we will go over how to implement and support air tap gestures in your application. The air tap gesture is the simplest select gesture performed on the Hololens. More on gestures can be found here.

Another way to interact with your holograms is via voice commands. Hololens supports a variety of voice commands with the help of Cortana. Apart from the standard commands, you can program your own keywords to specify certain interactions with holographic objects.
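Custom keywords usually come down to a lookup from a spoken phrase to an action. The sketch below shows that pattern in plain C# so it runs outside Unity; the phrases and actions are hypothetical. On the device, Unity's KeywordRecognizer (in UnityEngine.Windows.Speech) would be constructed with the dictionary's keys and would report each recognized phrase through its OnPhraseRecognized event. Here, HandlePhrase is a hypothetical stand-in for that event handler and is simply called directly.

```csharp
using System;
using System.Collections.Generic;

var log = new List<string>(); // records which actions ran

// Hypothetical keywords mapped to the action each one triggers.
var keywords = new Dictionary<string, Action>
{
    ["Reset world"] = () => log.Add("reset"),
    ["Drop piece"] = () => log.Add("drop"),
};

// Stand-in for the recognizer's phrase handler: run the matching action, if any.
bool HandlePhrase(string text)
{
    if (!keywords.TryGetValue(text, out Action action)) return false; // unknown phrase: ignore
    action();
    return true;
}

// The phrases a KeywordRecognizer would be constructed with.
string[] phrases = new string[keywords.Count];
keywords.Keys.CopyTo(phrases, 0);
Console.WriteLine(string.Join(", ", phrases));

HandlePhrase("Reset world");
HandlePhrase("Open menu"); // not registered, so nothing happens
Console.WriteLine(string.Join(", ", log)); // reset
```

Keeping the phrases and their actions in one dictionary means adding a new voice command is a one-line change, and the recognizer's keyword list always stays in sync with the handlers.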

Now that we have successfully created our first Hololens application, we need to load it onto the Hololens and try it out in real time. This involves several steps, from applying settings in Unity to building the application and deploying it to the device with Visual Studio.

One of the most elegant features of the Hololens is that, basically, you are the cursor. Looking at a holographic object is equivalent to pointing your mouse cursor at it.
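Under the hood, "you are the cursor" means casting a ray from the user's head along the gaze direction and checking what it hits first. In Unity, this is typically a Physics.Raycast from the main camera's position along its forward vector. The Unity-free sketch below does the same test by hand against hypothetical spherical holograms, using System.Numerics.Vector3 in place of Unity's types.

```csharp
using System;
using System.Numerics;

// Hypothetical holograms modeled as spheres in front of the user.
var holograms = new (string Name, Vector3 Center, float Radius)[]
{
    ("Cube", new Vector3(0f, 0f, 2f), 0.25f),
    ("Sphere", new Vector3(1f, 0f, 3f), 0.25f),
};

// Distance along the ray to the sphere's near surface, or null if the ray misses.
float? RaySphere(Vector3 origin, Vector3 dir, Vector3 center, float radius)
{
    Vector3 toCenter = center - origin;
    float along = Vector3.Dot(toCenter, dir);                // distance to closest approach (dir is unit length)
    float missSq = toCenter.LengthSquared() - along * along; // squared gap at closest approach
    if (missSq > radius * radius) return null;               // ray passes beside the sphere
    float t = along - MathF.Sqrt(radius * radius - missSq);  // first surface crossing
    return t >= 0f ? t : (float?)null;                       // ignore hits behind the viewer
}

// Name of the nearest hologram under the gaze ray, or null if there is none.
string GazeTarget(Vector3 headPosition, Vector3 gazeDirection)
{
    Vector3 dir = Vector3.Normalize(gazeDirection);
    string best = null;
    float bestDistance = float.MaxValue;
    foreach (var h in holograms)
    {
        float? d = RaySphere(headPosition, dir, h.Center, h.Radius);
        if (d.HasValue && d.Value < bestDistance)
        {
            bestDistance = d.Value;
            best = h.Name;
        }
    }
    return best;
}

Console.WriteLine(GazeTarget(Vector3.Zero, new Vector3(0f, 0f, 1f)) ?? "nothing"); // Cube
Console.WriteLine(GazeTarget(Vector3.Zero, new Vector3(0f, 1f, 0f)) ?? "nothing"); // nothing
```

Physics.Raycast also reports the closest collider it hits, which is why the sketch keeps only the nearest intersection.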

In this post, we will develop a simple script that uses the Gaze feature of the Hololens to perform actions on objects in the scene that we can interact with.

Now that the project environment is ready for holographic development, we can design our first hologram. We will start out with something really simple and build from there.


Archives

December 2018


RSS Feed
